Deleting a Large Number of Records in SQL Tables

Why executing a single DELETE query is bad?

If someone tells you to delete all expired data based on the data in the "Expire_Time" column, the first option that comes to your mind is to execute a DELETE query with a WHERE clause like below.

But if your table has a large number of expired rows, this is the worst method to delete them. Obviously good for deleting a few rows ;) Let's discuss what cause it to be the worst method to delete a large number of rows in a SQL table.

- Updating or deleting a large amount of rows in MySQL will create locks that will paralyze other queries running in parallel. The lock mechanism is used by the database engine to ensure data integrity when multiple users want to access the same data at the same time. During a delete operation, the lock manager locks the necessary objects and the taken lock types depend on the isolation level of the transactions.

- Deleting millions of rows without enough transaction log space can cause all sorts of problems in trying to complete the deletion job. Most databases are sized with enough disk space to manage the cross-section of DML (Data Manipulate Language) activity on the database. If you attempt to delete a large number of rows in one transaction, then the DELETE job will fill the transaction log until the DELETE is committed. There are plenty of workarounds to the transaction log growing and filling the disks. You can provision extra disk or create extra transaction log files. But you may find these steps don’t solve the problem.

Anyway, if you try the above query on a table with millions of expired rows, the server will hang for hours with no indication of how much progress it had made.

What is the best method to delete a large number of rows in a SQL table?

As far as I know, the best method to delete millions of rows in a SQL table is to complete the DELETE in batches. By deleting in batches, the job is using smaller amounts of log space, committing and flushing to disk. You can keep reusing the same disk space for logs by setting the DB in BULK-LOGGED. The following MS SQL Server query shows a very simple example for batch wise deleting rows in a table.This MS SQL Server query performs the following 5 steps.

- Set a cutoff date (only records from before this date will be deleted)

- Loop until all records from prior to the cutoff date have been deleted

- Delete the oldest 10000 records from the table

- Info log to show the progress

- Go back to step 2

One of the advantages of this method is you can cancel query execution anytime. Then the execution can continue from where it left off anytime. Keeping a backup table for deleted rows is always a wise idea💡. You can delete the backup table before starting the next data cleaning process to make it ready for the next backup after confirming that the previous data cleaning process didn't remove any required data.

Note: If your DB has expired data rows that should be deleted, that means you will get more expired rows in the future also. As a best practice, you can write a stored procedure to clean expired data and schedule to run that job weekly or monthly when the table is created if you expect expired data in that table.

Sample MS SQL Server query to clean expired data chunk wise and batch wise

Let's check a data cleanup stored procedure, I wrote in TSQL (Transact Structured Query Language) to clean expired JWT IDs in IDN_OIDC_JTI table of WSO2 Identity Server. The related issue for this cleanup query is IDN_OIDC_JTI Table is Growing. Read the issue first because it will help you to understand the code better. Click here to view that open source data cleanup stored procedure, I wrote in TSQL. Go through it carefully and then we can discuss what it does here step by step.First, it declares some variables and set values for them. Some important variables are

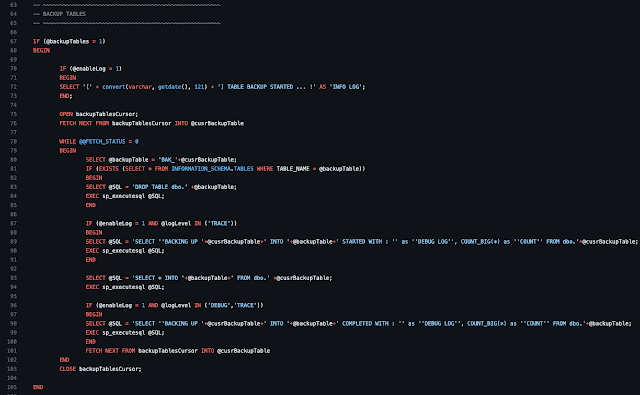

Then the backup process starts. The WHILE loop "WHILE @@FETCH_STATUS = 0" iterates as long as the previous fetch operation, that used to fetch the next value from the @backupTablesCursor, was successful. Inside the loop, the script performs table backup operations. First, it checks if a backup table already exists using the table name obtained from @cusrBackupTable. If the backup table exists, it generates a DROP TABLE statement to remove it. Following that, the script generates a dynamic SQL statement (@SQL) to create the backup table using the SELECT INTO statement. It copies all the data from the original table (@cusrBackupTable) into the backup table (@backupTable). After that backup process is completed, the query "FETCH NEXT FROM backupTablesCursor INTO @cusrBackupTable" is called again to fetch the next table name before ending the loop and tring to continue the loop. Once the loop finishes, it closes the cursor.

- chunkSize - The no of rows for each chunk which will be divided into batches later.

- batchSize - The no of rows for each batch to avoid table locks.

- checkCount - To terminate the cleanup process if the last chunk's row count is less than this checkCount value.

- backupTables - To indicate whether we need to backup the cleaned data or not.

- sleepTime - The time duration for a sleep after each batch deletion to avoid table locks.

- enableAudit - To indicate whether we need to keep track of all the deleted tokens.

Then we come to the audit log table creation section. It checks whether a table named "AUDITLOG_IDN_OIDC_JTI_CLEANUP" exists in the database. If it doesn't exist, it will create an audit log table by using the query "Select * into dbo.AUDITLOG_IDN_OIDC_JTI_CLEANUP from dbo.IDN_OIDC_JTI where 1=2;". However, the condition "where 1=2" ensures that no data is copied into the new table.

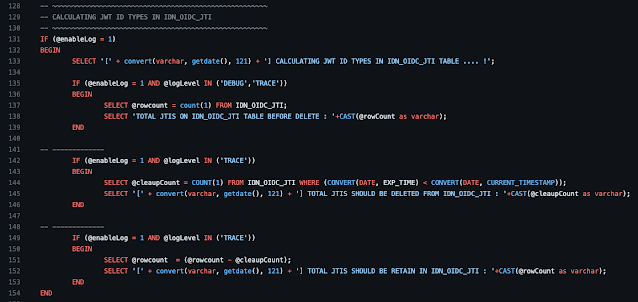

Then, it calculates the total JWT IDs currently in the "IDN_OIDC_JTI" table. Then it shows the number of rows that should be deleted after the cleanup process and the number of rows that should be retained after the cleanup process.

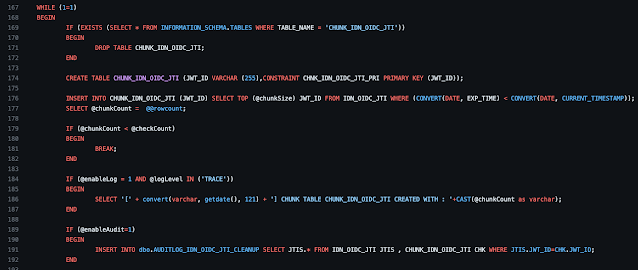

Now we come to the most important part of the query which is batch wise data cleanup part. The process starts with an infinite loop that breaks if the row count of the current chunk (@chunkSize) is less than the check count (@checkCount). Inside the loop, the query checks whether a table named "CHUNK_IDN_OIDC_JTI" exists and deletes the table if it exists. After dropping the table (if it existed), a new table named "CHUNK_IDN_OIDC_JTI" is created with a single column named "JWT_ID". Then we populate the "CHUNK_IDN_OIDC_JTI" table with a specified number of expired records (@chunkSize) from the "IDN_OIDC_JTI" table based on the "EXP_TIME" column.

Inside the outer loop, there is another infinite loop to process data in batches within each chunk. This infinite loop breaks if the row count of the current batch equals 0. Similar to the previous chunk creation part, the query checks if a table named "BATCH_IDN_OIDC_JTI" exists and drop that table if it exists. Then a new table named "BATCH_IDN_OIDC_JTI" is created with a single column named "JWT_ID". Then we populate the "BATCH_IDN_OIDC_JTI" table with a specified number of records (@batchSize) from the "CHUNK_IDN_OIDC_JTI" table. If the "BATCH_IDN_OIDC_JTI" table's row count (@batchCount) is greater than 0, we remove records from the "IDN_OIDC_JTI" table where the "JWT_ID" matches the values retrieved from the "BATCH_IDN_OIDC_JTI" table which contains the IDs of the current batch. Then we execute another DELETE query to remove corresponding records from the 'CHUNK_IDN_OIDC_JTI' table also. Then we give a relaxation period to the DB server😜 by using a delay with the @sleepTime that we defined earlier. The process then continues with the next iteration of the inner loop to perform batch deletions until there are no more records to process.

Restore the cleaned data by using the backup table

If something went wrong, we can terminate the stored procedure execution and restore all the cleaned data by using the"BAK_IDN_OIDC_JTI" table we created. I'm not going to explain the code since I hope you can read and understand a TSQL query now by yourselves. So... click here to view the data restoration stored procedure, I wrote to restore the data from the above created backup table.

That's all for today. Hope you learned something important for your software engineering or DB administration career. Ask any question you got and share your concerns, thoughts, and ideas about the content in this article as a comment below. Happy coding!

0 Comments

Post a Comment